Vector Space Model
Vector space model or term vector model is an algebraic model for representing text documents (and any objects, in general) as vectors of identifiers (such as index terms). It is used in information filtering, information retrieval, indexing and relevancy rankings. Its first use was in the SMART Information Retrieval System.

Definitions

Documents and queries are represented as vectors:

$$d_j = (w_{1,j}, w_{2,j}, \dots, w_{n,j})$$

$$q = (w_{1,q}, w_{2,q}, \dots, w_{n,q})$$

where $w_{i,j}$ is the weight of term $i$ in document $d_j$, $w_{i,q}$ is its weight in the query $q$, and $n$ is the number of terms. Each dimension corresponds to a separate term. If a term occurs in the document, its value in the vector is non-zero. Several different ways of computing these values, also known as (term) weights, have been developed. One of the best known schemes is tf-idf weighting (see the example below).

The definition of ''term'' depends on the application. Typically terms are single words, keywords, or longer phrases. If words are chosen to be the terms, the dimensionality of the vector is the number of words in the vocabulary (the number of distinct words occurring in the corpus).
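To make the definitions concrete, here is a minimal Python sketch that builds a vocabulary from a toy corpus and maps documents and a query into the same term space. It assumes terms are lowercase, whitespace-separated words and uses raw term counts as the weights, which is only one of several possible weighting schemes; the helper names `tokenize`, `build_vocabulary` and `to_vector` are hypothetical.

```python
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Split text into lowercase terms (assumption: single words, no stemming)."""
    return text.lower().split()


def build_vocabulary(corpus: list[str]) -> list[str]:
    """Sorted distinct terms of the corpus; its length is the vector dimension."""
    return sorted({term for doc in corpus for term in tokenize(doc)})


def to_vector(text: str, vocabulary: list[str]) -> list[int]:
    """One dimension per vocabulary term, holding that term's raw count in the text.

    A dimension is non-zero exactly when its term occurs in the text;
    words outside the vocabulary are ignored.
    """
    counts = Counter(tokenize(text))
    return [counts[term] for term in vocabulary]


corpus = ["the cat sat", "the dog sat down", "cat and dog"]
vocab = build_vocabulary(corpus)
doc_vectors = [to_vector(doc, vocab) for doc in corpus]
query_vector = to_vector("cat dog", vocab)

print(vocab)         # ['and', 'cat', 'dog', 'down', 'sat', 'the']
print(doc_vectors)   # [[0, 1, 0, 0, 1, 1], [0, 0, 1, 1, 1, 1], [1, 1, 1, 0, 0, 0]]
print(query_vector)  # [0, 1, 1, 0, 0, 0]
```

Because the query is mapped with the same vocabulary as the documents, it has the same dimensionality as every document vector, which is what makes vector comparisons between them possible.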

Vector operations can be used to compare documents with queries.

Applications

Relevance

Relevance is the concept of one topic being connected to another topic in a way that makes it useful to consider the second topic when considering the first. The concept of relevance is studied in many different fields, including cognitive sci ...

ranking

A ranking is a relationship between a set of items such that, for any two items, the first is either "ranked higher than", "ranked lower than" or "ranked equal to" the second.

In mathematics, this is known as a weak order or total preorder of o ...

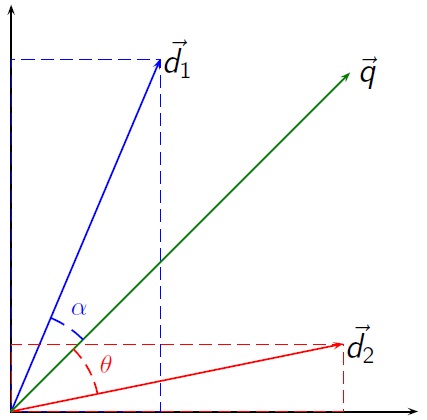

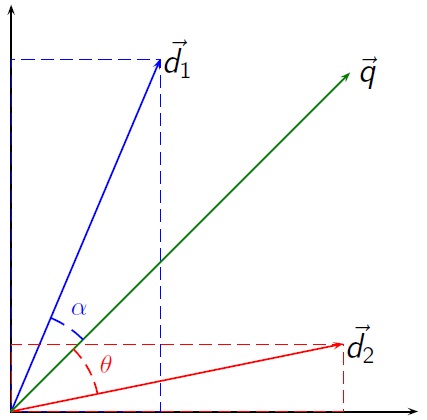

s of documents in a keyword search can be calculated, using the assumptions of document similarities theory, by comparing the deviation of angles between each document vector and the original query vector where the query is represented as a vector with same dimension as the vectors that represent the other documents.

In practice, it is easier to calculate the cosine of the angle between the vectors, instead of the angle itself:

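$$\cos\theta = \frac{\mathbf{d_2} \cdot \mathbf{q}}{\left\| \mathbf{d_2} \right\| \left\| \mathbf{q} \right\|}$$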

where $\mathbf{d_2} \cdot \mathbf{q}$ is the intersection (i.e. the dot product) of the document (d2 in the figure to the right) and the query (q in the figure) vectors, $\left\| \mathbf{d_2} \right\|$ is the norm of vector d2, and $\left\| \mathbf{q} \right\|$ is the norm of vector q. The norm of a vector is calculated as:

$$\left\| \mathbf{q} \right\| = \sqrt{\sum_{i=1}^{n} w_{i,q}^2}$$

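Using the cosine, the similarity between document $d_j$ and query $q$ can be calculated as:

$$\mathrm{sim}(d_j, q) = \frac{\mathbf{d_j} \cdot \mathbf{q}}{\left\| \mathbf{d_j} \right\| \left\| \mathbf{q} \right\|} = \frac{\sum_{i=1}^{n} w_{i,j} \, w_{i,q}}{\sqrt{\sum_{i=1}^{n} w_{i,j}^2} \, \sqrt{\sum_{i=1}^{n} w_{i,q}^2}}$$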
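The cosine formula translates directly into code. The Python sketch below, using hypothetical weight vectors, implements the similarity and ranks documents by it. Returning 0.0 when a vector is all zeros is an assumption added here, since the formula is undefined in that case. The sketch also checks that ranking by descending cosine gives the same order as ranking by ascending angle: arccos is strictly decreasing on [-1, 1], which is why computing the cosine is enough.

```python
import math


def norm(v: list[float]) -> float:
    """Euclidean norm: square root of the sum of squared weights."""
    return math.sqrt(sum(x * x for x in v))


def cosine_similarity(d: list[float], q: list[float]) -> float:
    """sim(d, q) = (d . q) / (||d|| * ||q||).

    Assumption: returns 0.0 when either vector is all zeros (the formula is undefined there).
    """
    denominator = norm(d) * norm(q)
    if denominator == 0.0:
        return 0.0
    return sum(w_d * w_q for w_d, w_q in zip(d, q)) / denominator


def angle(d: list[float], q: list[float]) -> float:
    """Angle in radians; the cosine is clamped to [-1, 1] to absorb round-off."""
    return math.acos(max(-1.0, min(1.0, cosine_similarity(d, q))))


# Hypothetical weight vectors over a three-term vocabulary.
docs = {"d1": [2.0, 0.0, 1.0], "d2": [1.0, 1.0, 0.0], "d3": [0.0, 3.0, 1.0]}
query = [1.0, 1.0, 0.0]

by_cosine = sorted(docs, key=lambda name: cosine_similarity(docs[name], query), reverse=True)
by_angle = sorted(docs, key=lambda name: angle(docs[name], query))

print(by_cosine)              # ['d2', 'd3', 'd1']
print(by_cosine == by_angle)  # True
```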

As all vectors under consideration by this model are element-wise nonnegative, a cosine value of zero means that the query and document vectors are orthogonal and have no match (i.e. none of the query terms occurs in the document being considered). See cosine similarity for further information.
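The reasoning behind this statement is that with non-negative weights every product $w_{i,j} w_{i,q}$ in the numerator is at least zero, so the sum can only be zero if every product is zero, that is, if no term carries a non-zero weight in both vectors. A small Python check, with a hypothetical four-term vocabulary and made-up weights:

```python
import math


def cosine_similarity(d: list[float], q: list[float]) -> float:
    """Cosine of the angle between d and q (assumes neither vector is all zeros)."""
    dot = sum(a * b for a, b in zip(d, q))
    return dot / (math.sqrt(sum(a * a for a in d)) * math.sqrt(sum(b * b for b in q)))


def shares_a_term(d: list[float], q: list[float]) -> bool:
    """True if at least one term has a non-zero weight in both vectors."""
    return any(a != 0 and b != 0 for a, b in zip(d, q))


# Hypothetical vocabulary: ["cat", "dog", "fish", "bird"]; all weights non-negative.
doc = [3.0, 0.0, 1.0, 0.0]         # mentions "cat" and "fish"
query_miss = [0.0, 2.0, 0.0, 1.0]  # asks for "dog" and "bird"
query_hit = [1.0, 0.0, 0.0, 1.0]   # asks for "cat" and "bird"

print(cosine_similarity(doc, query_miss), shares_a_term(doc, query_miss))  # 0.0 False
print(cosine_similarity(doc, query_hit) > 0, shares_a_term(doc, query_hit))  # True True
```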

Term frequency-inverse document frequency weights

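In the classic vector space model proposed by Salton, Wong and Yang (G. Salton, A. Wong, C. S. Yang, "A vector space model for automatic indexing", ''Communications of the ACM'', v.18 n.11, p.613–620, Nov. 1975), the term-specific weights in the document vectors are products of local and global parameters. The model is known as the term frequency-inverse document frequency model. The weight vector for document ''d'' is $\mathbf{v}_d = [w_{1,d}, w_{2,d}, \ldots, w_{n,d}]^T$, where

$$w_{t,d} = \mathrm{tf}_{t,d} \cdot \log\frac{|D|}{|\{d' \in D \mid t \in d'\}|}$$

and

* $\mathrm{tf}_{t,d}$ is the term frequency of term ''t'' in document ''d'' (a local parameter)
* $\log\frac{|D|}{|\{d' \in D \mid t \in d'\}|}$ is the inverse document frequency (a global parameter). $|D|$ is the total number of documents in the document set; $|\{d' \in D \mid t \in d'\}|$ is the number of documents containing the term ''t''.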
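A minimal Python sketch of these weights follows. It assumes documents are already tokenized into term lists, takes $\mathrm{tf}_{t,d}$ to be the raw count and uses the natural logarithm (the base only rescales all weights by a constant); the function name `tfidf_vectors` and the toy corpus are hypothetical.

```python
import math
from collections import Counter


def tfidf_vectors(corpus: list[list[str]]) -> tuple[list[str], list[list[float]]]:
    """Return (vocabulary, weight vectors) with w[t, d] = tf(t, d) * log(|D| / df(t)).

    tf(t, d) is the raw count of t in d; df(t) is the number of documents containing t.
    """
    vocabulary = sorted({t for doc in corpus for t in doc})
    n_docs = len(corpus)
    # Count each term at most once per document to get document frequencies.
    df = Counter(t for doc in corpus for t in set(doc))
    vectors = []
    for doc in corpus:
        tf = Counter(doc)
        vectors.append([tf[t] * math.log(n_docs / df[t]) for t in vocabulary])
    return vocabulary, vectors


corpus = [
    ["vector", "space", "model"],
    ["vector", "model", "model"],
    ["boolean", "model"],
]
vocab, weights = tfidf_vectors(corpus)
for term, w in zip(vocab, weights[1]):
    print(f"{term}: {w:.3f}")
# boolean: 0.000
# model: 0.000
# space: 0.000
# vector: 0.405
```

A term that occurs in every document (here ''model'') gets an inverse document frequency of $\log 1 = 0$, so its weight is zero regardless of how often it appears in a document.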

Advantages

The vector space model has the following advantages over the Standard Boolean model:

#Simple model based on linear algebra
#Term weights not binary
#Allows computing a continuous degree of similarity between queries and documents
#Allows ranking documents according to their possible relevance
#Allows partial matching

Most of these advantages are a consequence of the difference in the density of the document collection representation between Boolean and term frequency-inverse document frequency approaches. When using Boolean weights, any document lies at a vertex of an n-dimensional hypercube. Therefore, there are $2^n$ possible document representations and the maximum Euclidean distance between pairs is $\sqrt{n}$. As documents are added to the document collection, the region defined by the hypercube's vertices becomes more populated and hence denser. Unlike Boolean weights, when a document is added using term frequency-inverse document frequency weights, the inverse document frequencies of the terms in the new document decrease while those of the remaining terms increase. On average, as documents are added, the region where documents lie expands, regulating the density of the entire collection representation. This behavior models the original motivation of Salton and his colleagues that a document collection represented in a low-density region could yield better retrieval results.
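Both claims in this paragraph can be checked numerically. The Python sketch below, for a hypothetical n = 3 and a hypothetical toy corpus, enumerates the Boolean vertices and their largest pairwise distance, then compares each term's inverse document frequency before and after a new document is added. It assumes idf is computed as $\log(|D| / \mathrm{df}_t)$ with the natural logarithm.

```python
import itertools
import math


def boolean_vertices(n: int) -> list[tuple[int, ...]]:
    """Every binary document vector over n terms, i.e. the vertices of the n-cube."""
    return list(itertools.product((0, 1), repeat=n))


def max_pairwise_distance(points: list[tuple[int, ...]]) -> float:
    """Largest Euclidean distance between any two points."""
    return max(math.dist(a, b) for a, b in itertools.combinations(points, 2))


def idf(term: str, corpus: list[set[str]]) -> float:
    """log(|D| / df(t)); assumes the term occurs in at least one document."""
    df = sum(1 for doc in corpus if term in doc)
    return math.log(len(corpus) / df)


n = 3
vertices = boolean_vertices(n)
print(len(vertices), 2 ** n)                                        # 8 8
print(math.isclose(max_pairwise_distance(vertices), math.sqrt(n)))  # True

# Hypothetical corpus (documents as term sets) and a new document to add.
corpus = [{"vector", "model"}, {"boolean", "model"}, {"vector", "space"}]
new_doc = {"vector", "query"}
before = {t: idf(t, corpus) for t in ("vector", "boolean", "space")}
after = {t: idf(t, corpus + [new_doc]) for t in before}
print({t: "down" if after[t] < before[t] else "up" for t in before})
# {'vector': 'down', 'boolean': 'up', 'space': 'up'}
```

The term ''vector'' appears in the new document, so its idf falls from log(3/2) to log(4/3); ''boolean'' and ''space'' do not, so their idf rises from log 3 to log 4 because only the collection size grew.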

Limitations

The vector space model has the following limitations:

#Long documents are poorly represented because they have poor similarity values (a small scalar product and a large dimensionality).

#Search keywords must precisely match document terms; word substrings might result in a "false positive match".

#Semantic sensitivity; documents with similar context but different term vocabulary won't be associated, resulting in a "false negative match".

#The order in which the terms appear in the document is lost in the vector space representation.

#Theoretically assumes terms are statistically independent.

#Weighting is intuitive but not very formal.
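Two of these limitations are easy to reproduce with a plain bag-of-words representation. In this Python sketch (raw term counts, whitespace tokenization, example phrases chosen for illustration) two sentences with opposite meanings get identical vectors, and two phrases with related meaning but no shared terms get a similarity of zero.

```python
from collections import Counter


def bag_of_words(text: str) -> Counter:
    """Raw term counts; word order is discarded by construction."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity of two term-count vectors (assumes both are non-empty)."""
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = sum(v * v for v in a.values()) ** 0.5
    norm_b = sum(v * v for v in b.values()) ** 0.5
    return dot / (norm_a * norm_b)


# Word order is lost: opposite meanings, identical vectors.
print(bag_of_words("dog bites man") == bag_of_words("man bites dog"))  # True

# Semantic sensitivity: related phrases with no shared terms score 0 (a false negative).
print(cosine(bag_of_words("car repair"), bag_of_words("automobile maintenance")))  # 0.0
```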

Many of these difficulties can, however, be overcome by the integration of various tools, including mathematical techniques such as singular value decomposition and lexical databases such as WordNet.
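As a hedged sketch of how singular value decomposition can ease the vocabulary-mismatch problem, the following Python example applies NumPy's `np.linalg.svd` to a tiny hypothetical term-document matrix in the style of latent semantic analysis, one of the extensions of the model. The matrix, the choice k = 2 and the query fold-in step are illustrative assumptions, not a prescribed procedure.

```python
import numpy as np

# Hypothetical term-document matrix: rows are terms, columns are documents d1..d3.
A = np.array(
    [
        [1, 0, 0],  # car: only in d1
        [0, 1, 0],  # automobile: only in d2
        [1, 1, 0],  # engine: in d1 and d2
        [0, 0, 1],  # flower: only in d3
        [0, 0, 1],  # garden: only in d3
    ],
    dtype=float,
)


def cosine(a: np.ndarray, b: np.ndarray) -> float:
    """Cosine of the angle between two non-zero vectors."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


# Truncated SVD: keep the k largest singular values, as in latent semantic analysis.
k = 2
U, s, Vt = np.linalg.svd(A, full_matrices=False)
U_k, s_k, Vt_k = U[:, :k], s[:k], Vt[:k, :]
doc_coords = Vt_k.T  # row j holds document d_(j+1) in the reduced space

# Fold the query "car" into the same space: q_k = q U_k diag(1 / s_k).
q = np.array([1, 0, 0, 0, 0], dtype=float)
q_k = (q @ U_k) / s_k

print(cosine(q, A[:, 1]))          # raw space, "car" vs d2: 0.0
print(cosine(q_k, doc_coords[1]))  # reduced space, "car" vs d2: close to 1.0
print(cosine(q_k, doc_coords[2]))  # reduced space, "car" vs d3: close to 0.0
```

In the raw term space the query ''car'' does not match the second document at all, whereas in the reduced space it does, because ''car'' and ''automobile'' both co-occur with ''engine''. This is only an illustration; in practice k and the weighting of the matrix are tuning choices, and the matrices are far larger.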

Models based on and extending the vector space model

Models based on and extending the vector space model include:

* Generalized vector space model
* Latent semantic analysis
* Term
* Rocchio Classification
* Random indexing

Software that implements the vector space model

The following software packages may be of interest to those wishing to experiment with vector models and implement search services based upon them.

Free open source software

* Apache Lucene is a high-performance, open source, full-featured text search engine library written entirely in Java.

* OpenSearch and Solr: two well-known search engines built on Lucene (many smaller ones exist).

* Gensim is a Python+NumPy framework for vector space modelling. It contains incremental (memory-efficient) algorithms for term frequency-inverse document frequency, Latent Semantic Indexing, Random Projections and Latent Dirichlet Allocation.

* Weka is a popular data mining package for Java including WordVectors and Bag Of Words models.

* Word2vec uses vector spaces for word embeddings.

Further reading

* G. Salton (1962), "Some experiments in the generation of word and document associations", ''Proceeding AFIPS '62 (Fall) Proceedings of the December 4–6, 1962, fall joint computer conference'', pages 234–250. ''(Early paper of Salton using the term-document matrix formalization)''
* G. Salton, A. Wong, and C. S. Yang (1975), "A Vector Space Model for Automatic Indexing", ''Communications of the ACM'', vol. 18, no. 11, pages 613–620. ''(Article in which a vector space model was presented)''
* David Dubin (2004), "The Most Influential Paper Gerard Salton Never Wrote". ''(Explains the history of the Vector Space Model and the non-existence of a frequently cited publication)''
* ttp://nlp.stanford.edu/IR-book/html/htmledition/vector-space-classification-1.html Relationship of vector space search to the "k-Nearest Neighbor" search
